Unmanned marine systems fall into two categories: unmanned surface vehicles
(USVs) and unmanned underwater vehicles (UUVs). The methods of data depiction
and presentation for the two differ greatly. For underwater systems,
high-bandwidth communication is often difficult, so missions are usually
preprogrammed and the systems often operate with a higher level of autonomy.
This makes the mission control aspect of these systems relatively simple. For
systems such as the Bluefin 21, the control station and data processing system
is a Windows-based laptop loaded with the company’s proprietary software
(Bluefin Inc., 2015). For surface-based systems such as the Protector USV,
mission control is more similar to that of an unmanned aerial system (UAS).
When operating on the surface, information on weather, sea conditions, and ship
traffic, along with precision targeting, is required in real time. Having to
remotely interpret the USV’s immediate environment requires complex depiction
and presentation strategies. Studying the systems currently used for data
depiction and presentation, and identifying their shortfalls, will help
engineers develop new methods and techniques for data depiction and
presentation for unmanned systems in general.

Data Depiction and Presentation for the Protector

The Protector USV is an advanced surface warfare platform that can be used for
anti-terror/force protection missions, port security, and intelligence,
surveillance, and reconnaissance. The system consists of a 30 ft rigid-hull
inflatable boat, an interchangeable mission payload, and a two-person command
and control station that can be placed either on a larger ship or on shore
(Rafael LTD., 2010). In terms of data depiction and presentation, the command
and control station is divided into two separate functions: a vessel control
station and a payload control station. The mission payload for this system is
very similar to those of UASs; therefore, for the purpose of this study,
understanding the vessel control station takes priority over the payload
control station. Controlling a surface vessel is very difficult when no
proprioceptive feedback is given to the operator. To compensate for the lack of
proprioceptive feedback, the Protector has been designed with a higher degree
of autonomy than most other unmanned systems. The system features cameras,
sensors, and algorithms that pick optimal sea paths and account for weather,
waves, and currents. The system also has a dynamic stabilization capability as
well as an autonomous sense-and-avoid capability. The outputs of all these
systems are overlaid and presented to the vessel operator in a control station
that is a replica of the vessel’s actual controls. In addition to the replica
controls, there is a display of an overhead map as well as a view from the
safety camera, which is located approximately where the skipper would be
sitting or standing if on board the vessel (IWeapons.com, 2007). The operator
can control the system in either a manual or a semi-autonomous mode. During
docking and precision maneuvers, manual control is ideal. At high speeds in the
open ocean, the semi-autonomous mode is better suited to the mission.

Data Depiction and Perception Challenges

One of the largest challenges for any surface-based marine system is the lack
of situational awareness and proprioceptive feedback. Unlike the skipper of a
manned surface vessel, the unmanned skipper receives all feedback in the form
of numbers and sensor readings rather than physical cues such as wind, boat
movement, and engine sound. Not being able to feel the waves under the boat,
compounded by poor visibility of the surface conditions during night operations
or in dense fog, makes it more challenging to get the most out of the USV’s
performance. The absence of these physical cues makes piloting an unmanned
surface vessel a complex task, but there are a few methods to mitigate this
challenge. One method is to add a high degree of automation that offloads some
environment-based decisions from the skipper, such as sea path selection and
stabilization. This helps to a degree during simple maneuvers, but for
precision maneuvers or in extreme weather and sea conditions, this solution
does not provide the best outcome (Gilat, 2012). Another tool used to help USV
skippers is training. Similar to pilots training to fly with only flight
instruments, conditioning USV skippers to effectively turn numerical data into
a cognitive picture of the remote environment is essential (Gilat, 2012). To
aid in this process, I propose including proprioceptive feedback in the command
and control station via full-motion simulation.

Alternative Data Depiction and Presentation Strategies

In aviation, pilots who are training to fly with only instruments first train
on simple computer-based simulators. Once a pilot can cognitively process
numerical and instrument data into spatial awareness, they graduate to a
full-motion simulator. This helps them become physically aware of the simulated
environment and physically sense the aircraft’s performance. The real-time
motion simulation helps the pilot manage control inputs as well as assess
aircraft health and performance. If engineers can create the same full-motion
feedback for USV skippers, I believe it would help bridge the gap from
numerical sensor data to real-life spatial and environmental understanding.
Beyond simulating the motion of the vessel, providing simulated wind speed and
direction as well as engine sound would also help the skipper gain a clear
understanding of the environment and the USV’s performance. The key to this
system would be real-time feedback. If there is a delay of even a few seconds
between the USV’s actual movement and the corresponding simulated movement of
the command and control station, the control inputs required to correct for
environmental influence would arrive too late to be effective. Another
difficulty of this system is the size and complexity of a full-motion command
and control station. This system would be better suited to a land-based control
facility than a ship-based facility. If proper training and full-motion
feedback are designed into the Protector, an unmanned skipper could manually
control the vessel to perform more complex and precise maneuvers and operate in
more severe sea and weather conditions.

Increasing the complexity and simulated feedback

of an USV skipper’s control station is a decision that needs to be made based

on mission limitations and mission profiles. For a steady state harbor protection

mission that requires precise maneuvering around ships, docks, and the

shoreline, the full motion command and control station may increase the

Protectors effectiveness and allow more complex manually controlled missions.

In expeditionary missions, a mobile form factor and highly automated mission control

method may be a more effective solution. Either way, as a study in design,

engineering a full motion command and control station for something like the

Protector USV could also provide valuable insight for land based unmanned

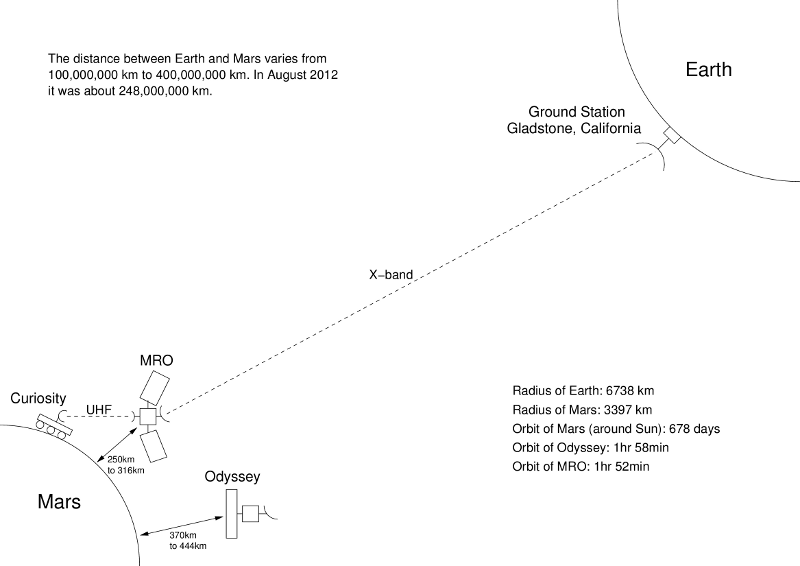

systems or even rover based systems that operator on the surface of Mars. The

most difficult aspect to this type of integration is the need for real time

data connections that could prove difficult over large distances. An emerging

methodology involving laser based communication could be the key to decreasing

data latency between vehicle and control station.
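To give a rough sense of why distance matters for real-time feedback, the
following minimal sketch computes only the signal propagation delay, which is
the physical floor that any radio or optical link must respect. The function
names and the example distances are assumptions made for illustration; they do
not come from the cited sources or from any Protector documentation.

```python
# Minimal sketch: physical lower bound on link latency (propagation delay only).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum, exact by definition


def one_way_delay_s(distance_m: float) -> float:
    """Return the minimum one-way signal delay in seconds over distance_m meters."""
    if distance_m < 0:
        raise ValueError("distance_m must be non-negative")
    return distance_m / SPEED_OF_LIGHT_M_PER_S


def round_trip_delay_s(distance_m: float) -> float:
    """Return the minimum out-and-back delay (command sent, response seen) in seconds."""
    return 2.0 * one_way_delay_s(distance_m)


# Hypothetical link distances, chosen only for illustration (not from the sources).
EXAMPLE_LINKS_M = {
    "shore station to nearby USV (assumed 20 km)": 20e3,
    "one hop up to a geostationary satellite (about 35,786 km)": 35_786e3,
    "Earth to Mars near closest approach (roughly 55 million km)": 55e9,
}

for label, distance_m in EXAMPLE_LINKS_M.items():
    print(f"{label}: round trip >= {round_trip_delay_s(distance_m):.6f} s")
```

These figures are lower bounds only. A real link would add encoding,
processing, and queuing delays on top of propagation, so the latency seen at a
full-motion control station would be higher than the values printed here.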

References

Bluefin Inc. (2015). Operator Software » Bluefin Robotics. Retrieved February 21, 2015, from http://www.bluefinrobotics.com/technology/operator-software/

Gilat, E. (2012, August 5). The Human Aspect of Unmanned Surface Vehicles. Retrieved February 21, 2015, from http://defense-update.com/20120805_human_aspects_of_usv.html#.VOYIWfnF9AU

IWeapons.com. (2007, March 29). Protector. Retrieved February 21, 2015, from http://web.archive.org/web/20070329024450/http://www.israeli-weapons.com/weapons/naval/protector/Protector.html

Rafael LTD. (2010). PROTECTOR: Unmanned Naval Patrol Vehicle. Retrieved February 21, 2015, from http://www.rafael.co.il/Marketing/288-1037-en/Marketing.aspx